ImageRegistration with Volume Data, poor convergence

Hi all,

I have scanning probe time-lapse image data with typical drift between images. I would like to register the images using translation only and then subtract them to quantify the local height changes.

ImageRegistration seems like the proper function for this. I see that the algorithm does not tolerate large translational offsets, so I first translate the image manually until it is close to registration:

ImageTransform/IOFF={109,-49,0} offsetImage neg26C_40C_start

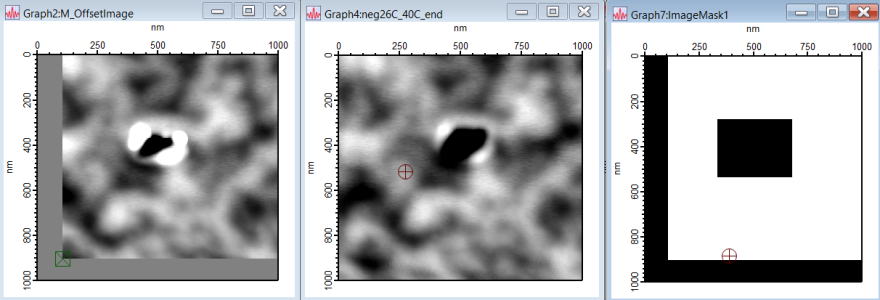

There are large Z offsets between the images, so I added constant offsets to bring them to approximately the same average Z height. There is also a feature in each image that changes a lot (this is the interesting part), so I created a mask to hide this feature along with the areas cut off by manually translating one of the images. The attached picture shows the translated image, the reference image (both on the same -10 nm to +10 nm height scale), and the mask. The mask is all zeros in the masked-out portions and contains the topo data from the translated image everywhere else. I actually have two masks (one for the test image, one for the reference image), but for simplicity they are identical. In my opinion, there should be enough unmasked data in both images to achieve registration.
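The Z leveling step described above can use the same mask, so that the changing feature does not bias the offset: shift the test image by the difference between the masked means of the two images. The sketch below is plain Python, not Igor code; the helper names are hypothetical, images are lists of rows, one shared mask is used for both images (as in the post), and any nonzero mask value marks a pixel to use.

```python
# Hypothetical helpers (plain Python, not Igor): level the test image so that its
# mean height over unmasked pixels matches the reference image.

def masked_mean(img, mask):
    """Mean of img over pixels whose mask value is nonzero."""
    total = 0.0
    count = 0
    for row, mrow in zip(img, mask):
        for v, m in zip(row, mrow):
            if m:
                total += v
                count += 1
    if count == 0:
        raise ValueError("mask excludes every pixel")
    return total / count


def level_to_reference(test, ref, mask):
    """Return a copy of test shifted by one constant so its masked mean equals ref's."""
    offset = masked_mean(ref, mask) - masked_mean(test, mask)
    return [[v + offset for v in row] for row in test]


# Toy 2x3 height maps (nm). The center pixel stands in for the changing feature,
# so it is masked out and does not affect the offset.
ref = [[1.0, 2.0, 3.0],
       [4.0, 0.0, 5.0]]
test = [[11.0, 12.0, 13.0],
        [14.0, 99.0, 15.0]]
mask = [[1, 1, 1],
        [1, 0, 1]]

leveled = level_to_reference(test, ref, mask)
print(leveled)  # [[1.0, 2.0, 3.0], [4.0, 89.0, 5.0]]
```

Because the correction is a single constant, it removes only the overall Z offset; a tilt between scans would remain.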

I tried to register the images with the following command:

ImageRegistration/CONV=1/TRNS={1,1,1}/ROT={0,0,0}/REFM=1/TSTM=1 testMask=ImageMask1 refMask=ImageMask2 testWave=M_OffsetImage, refWave=neg26C_40C_end

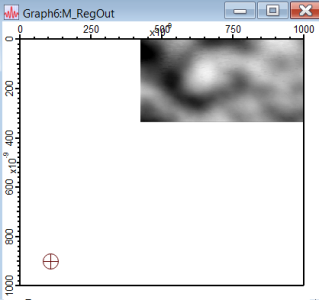

but this fails badly: the image is translated far up and to the right, nowhere near registration (image attached).

I figured that a too-coarse grid for the optimization might be responsible, so I tried the following command with the /PRDL=1 flag. It outputs a translation of only about 1 pixel (dx = 0.83, dy = -1.19 in the output below), which doesn't look adequate to me, although it's hard to tell because I don't know a priori what the perfect registration is. I suspect the algorithm is not finding the correct minimum, but it is hard to prove this to myself.

ImageRegistration/CONV=1/TRNS={1,1,1}/ROT={0,0,0}/REFM=1/TSTM=1/PRDL=1 testMask=ImageMask1 refMask=ImageMask2 testWave=M_OffsetImage, refWave=neg26C_40C_end

STATUS - Initializing...
STATUS - Optimizing...
STATUS - Creating output...
dx= +8.300902293E-01, dy= -1.187434748E+00, dz= +0.000000000E+00
a11= +1.000000000E+00, a12= +0.000000000E+00, a13= +0.000000000E+00
a21= +0.000000000E+00, a22= +1.000000000E+00, a23= +0.000000000E+00
a31= +0.000000000E+00, a32= +0.000000000E+00, a33= +1.000000000E+00
phi= +0.000000000E+00, thet= +0.000000000E+00, psi= +0.000000000E+00
lbd= +0.000000000E+00, gam= +0.000000000E+00
$err= +2.870310102E-14, $snr= -7.274063583E+00 dB

I also tried /PRDL=2 but this leads to bad results, just like the default /PRDL=4 I implicitly used earlier.
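Assuming /PRDL sets the depth of a multiresolution image pyramid (worth confirming in the ImageRegistration documentation), the idea can be illustrated with simple 2x2 block averaging: each level halves the image size, so detail gets coarser and a given offset shrinks by half per level. This is a hypothetical plain-Python sketch, not Igor code, and not necessarily how ImageRegistration builds its pyramid or how /PRDL values map to levels.

```python
# Hypothetical helpers (plain Python, not Igor): a simple image pyramid built by
# 2x2 block averaging. Illustration only; not ImageRegistration's own pyramid.

def downsample2(img):
    """Average non-overlapping 2x2 blocks; an odd last row/column is dropped."""
    h = len(img) // 2 * 2
    w = len(img[0]) // 2 * 2
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, w, 2)]
            for y in range(0, h, 2)]


def pyramid(img, levels):
    """Return [full resolution, 1/2, 1/4, ...], at most `levels` entries."""
    out = [img]
    while len(out) < levels and len(out[-1]) >= 2 and len(out[-1][0]) >= 2:
        out.append(downsample2(out[-1]))
    return out


img = [[float(4 * y + x) for x in range(4)] for y in range(4)]
p = pyramid(img, 3)
print(p[1])  # [[2.5, 4.5], [10.5, 12.5]]
print(p[2])  # [[7.5]]

# An offset of 8 pixels at full resolution is 8 / 2**k pixels at level k.
print([8 / 2 ** k for k in range(4)])  # [8.0, 4.0, 2.0, 1.0]
```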

Does anyone have tips for getting this to work reliably with this sort of masked data? Or will this algorithm only work well if the images are more similar than the ones I am using here?

Thanks,

Brandon

Hello Brandon,

It seems to me that you are going to a fair amount of trouble to register images that are not sufficiently similar for the purposes of ImageRegistration. To investigate this in more detail, it would be best if you could send an experiment containing the images and the commands you used to support@wavemetrics.com.

A.G.

WaveMetrics, Inc.

June 13, 2022 at 06:18 pm

The first two images you show are almost identical except for the translational offset and the black area in the middle. Are they different images? It should be possible to get the translation offset between those two images.

I have never used ImageRegistration, but you could try a user-defined fit function with two fit parameters, xOffset and yOffset. I imagine the main difficulty would arise if your offset is larger than the size of the features in your image, because that could make the fit get stuck in a local minimum. An alternative is a simple brute-force approach that tests 100 x 100 combinations of x and y offsets. Subtracting and summing with FastOP or MatrixOP is so fast that this should be feasible.
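The brute-force idea can be sketched as follows: for every integer offset within plus or minus max_shift, compare the overlapping, unmasked pixels of the two images and keep the offset with the smallest mean squared difference. Using the mean rather than the sum keeps offsets with smaller overlaps from being favored. This is plain Python with hypothetical helper names, not Igor code; in Igor the inner subtraction and summation would presumably be done with MatrixOP or FastOP for speed. A returned (dx, dy) means that test pixel (x, y) lines up with reference pixel (x + dx, y + dy).

```python
# Hypothetical helpers (plain Python, not Igor): brute-force search over integer
# x/y offsets, scoring each by the mean squared difference on unmasked overlap.

def masked_mse(test, ref, test_mask, ref_mask, dx, dy):
    """Mean squared difference between test[y][x] and ref[y+dy][x+dx] over pixels
    inside both images and unmasked in both. Returns (mse, pixels_used)."""
    h, w = len(ref), len(ref[0])
    total = 0.0
    n = 0
    for y in range(len(test)):
        ry = y + dy
        if not 0 <= ry < h:
            continue
        for x in range(len(test[0])):
            rx = x + dx
            if 0 <= rx < w and test_mask[y][x] and ref_mask[ry][rx]:
                d = test[y][x] - ref[ry][rx]
                total += d * d
                n += 1
    return (total / n if n else float("inf")), n


def brute_force_shift(test, ref, test_mask, ref_mask, max_shift, min_overlap=1):
    """Try every integer (dx, dy) in [-max_shift, max_shift]^2; return the best one."""
    best = (float("inf"), 0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            mse, n = masked_mse(test, ref, test_mask, ref_mask, dx, dy)
            if n >= min_overlap and mse < best[0]:
                best = (mse, dx, dy)
    return best[1], best[2]


def f(x, y):
    """Smooth toy surface used to build the example images."""
    return float(x * x + 3 * y * y + 2 * x * y)


N = 6
ref = [[f(x, y) for x in range(N)] for y in range(N)]
test = [[f(x + 2, y + 1) for x in range(N)] for y in range(N)]
test[2][2] = 1000.0              # a "feature" that changed between scans...
test_mask = [[1] * N for _ in range(N)]
test_mask[2][2] = 0              # ...so it is masked out
ref_mask = [[1] * N for _ in range(N)]

print(brute_force_shift(test, ref, test_mask, ref_mask, max_shift=3))  # (2, 1)
```

A 100 x 100 search means 10,000 evaluations of the overlap, which is why a vectorized implementation matters. The best integer offset could then serve as the starting point for a sub-pixel refinement.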

June 14, 2022 at 08:49 am

How many images are you considering: a few, or a few hundred? In the former case, I might suggest a manual (brute-force) approach. Find one or more features that remain consistent across all images. Set up a routine to step through the images and add a marker point on the feature(s) in each one. Then use the marker point(s) to align all the images.
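With several markers per image, the least-squares translation between two sets of matched marker positions is simply the average displacement (the difference of the two centroids). A hypothetical plain-Python helper for one image pair, not Igor code:

```python
# Hypothetical helper (plain Python, not Igor): translation from matched marker points.

def translation_from_markers(ref_points, img_points):
    """Least-squares translation (dx, dy) that moves img_points onto ref_points.
    Both lists hold (x, y) positions of the same features, in the same order."""
    if not ref_points or len(ref_points) != len(img_points):
        raise ValueError("need the same, non-zero number of markers in both images")
    n = len(ref_points)
    dx = sum(r[0] - p[0] for r, p in zip(ref_points, img_points)) / n
    dy = sum(r[1] - p[1] for r, p in zip(ref_points, img_points)) / n
    return dx, dy


ref_markers = [(10.0, 20.0), (40.0, 25.0), (30.0, 50.0)]
img_markers = [(12.0, 19.0), (43.0, 24.0), (31.0, 49.0)]
print(translation_from_markers(ref_markers, img_markers))  # (-2.0, 1.0)
```

For a stack, the markers in each frame would be compared with those in one chosen reference frame, giving one (dx, dy) per frame.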

June 14, 2022 at 09:32 am

ImageRegistration handles more than just translation, so manually positioning a few anchor points may not satisfy all the registration requirements.

June 14, 2022 at 09:35 am

Sorry for the delay. I thought of an obvious way to check whether I had just gotten lucky with my initial rough XY translation into approximate registration, leaving little further optimization to do: I repeated the procedure with a slightly different initial XY translation to see whether ImageRegistration moved the test image to the same place. It did not, so ImageRegistration is evidently not working on my particular images, at least with the parameters I chose.
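The check described here amounts to: combined offset = manual starting offset + refinement reported by the registration, which should come out the same for every starting point if the optimizer found the true minimum. A hypothetical plain-Python sketch, not Igor code; it assumes both offsets are expressed in the same units and sign convention (which should be verified against the /IOFF and ImageRegistration output conventions), and the numbers are made up.

```python
# Hypothetical check (plain Python, not Igor): if the registration converged to the
# true optimum, manual start + reported refinement should give the same total
# offset no matter where it started. Assumes both are in the same units and sign
# convention.

def totals_agree(runs, tol=0.5):
    """runs: list of ((start_x, start_y), (refine_x, refine_y)) pairs.
    Returns (spread, ok): the largest range of the combined offsets along x or y,
    and whether that range is within tol."""
    totals = [(sx + rx, sy + ry) for (sx, sy), (rx, ry) in runs]
    xs = [t[0] for t in totals]
    ys = [t[1] for t in totals]
    spread = max(max(xs) - min(xs), max(ys) - min(ys))
    return spread, spread <= tol


# Made-up numbers, for illustration only.
converged = [((10.0, -5.0), (0.5, -1.0)),
             ((12.0, -3.0), (-1.5, -3.0))]
not_converged = [((10.0, -5.0), (0.5, -1.0)),
                 ((6.0, -5.0), (0.0, 0.0))]
print(totals_agree(converged))      # (0.0, True)
print(totals_agree(not_converged))  # (4.5, False)
```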

To Igor, I can accept your point that these images are not sufficiently similar, but they don't need to be identical, right? The example in the reference paper is medical tomography where every slice should be slightly different. Maybe my images aren't sufficiently similar, but the background is very similar and I mask out the parts that don't match closely.

To Oleytkin, the images are not identical: they were taken 5 hours apart, but the background substrate has nominally the same topography. The hole in the middle, which I mask out, changes a lot. Your brute-force approach does seem like it would work here, and I might try it, although it might be difficult to systematize given the need to exclude certain features. My eventual goal is to do this for stacks of images, generating difference images to quantify changes over time.
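Once a shift is known, the difference image and a summary of the height change inside a region of interest (for example the masked hole) can be computed as below. This is a hypothetical plain-Python sketch, not Igor code, and it handles integer shifts only; pixel (x, y) of the later image is matched to pixel (x + dx, y + dy) of the earlier one, and pixels without a partner are left as None.

```python
# Hypothetical helpers (plain Python, not Igor): difference image after an integer
# shift, and the mean height change inside a region of interest.

def difference_image(later, earlier, dx, dy):
    """later - earlier, where later pixel (x, y) is matched to earlier pixel
    (x + dx, y + dy). Pixels with no partner inside earlier are None."""
    h, w = len(earlier), len(earlier[0])
    out = []
    for y, row in enumerate(later):
        out_row = []
        for x, v in enumerate(row):
            ex, ey = x + dx, y + dy
            if 0 <= ey < h and 0 <= ex < w:
                out_row.append(v - earlier[ey][ex])
            else:
                out_row.append(None)
        out.append(out_row)
    return out


def mean_change(diff, region):
    """Mean of the defined difference pixels where region is nonzero (None if none)."""
    vals = [d for drow, rrow in zip(diff, region)
            for d, r in zip(drow, rrow) if r and d is not None]
    return sum(vals) / len(vals) if vals else None


earlier = [[0.0, 1.0, 2.0],
           [3.0, 4.0, 5.0],
           [6.0, 7.0, 8.0]]
# Same surface moved one pixel in x, with the center feature 2.0 higher.
later = [[1.0, 2.0, 9.9],
         [4.0, 7.0, 9.9],
         [7.0, 8.0, 9.9]]
hole = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]

diff = difference_image(later, earlier, dx=1, dy=0)
print(diff)                     # [[0.0, 0.0, None], [0.0, 2.0, None], [0.0, 0.0, None]]
print(mean_change(diff, hole))  # 2.0

# For a time-lapse stack, repeat this for each frame (for example against the
# first frame), using whichever shift estimate you trust for that pair.
```

A sub-pixel shift, such as the ones ImageRegistration reports, would need interpolation instead of direct indexing.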

To jjweimar, I don't think my features are sharp enough for this; the background varies smoothly, and it also changes slightly between the two images.

I am preparing a new file with only these images and the relevant computation steps to send to support@wavemetrics.com. Thank you for offering to take a look at this, even if the answer is just that the images are too dissimilar for registration this way.

June 14, 2022 at 11:33 am

When I prepared a standalone file with just this computation, I must have done something differently: the test image registration now converges to approximately the same offset from different starting positions, which indicates that ImageRegistration is working for me now. I don't know where the mistake is in the original file, but I'll find it.

Thanks all.

June 14, 2022 at 12:24 pm

My comment about setting reference points was meant as a way to do the image alignment by brute force.

Another idea strikes me: threshold the images in a way that accentuates the key features and flattens the undesired background to zero. Do the image registration on the thresholded images, then use the reported values to align the source images.
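One possible thresholding rule is sketched below: pick a height level (here from a percentile of the height values) and keep only what lies above it, with everything else set to zero. This is a hypothetical plain-Python sketch, not Igor code; the percentile choice and the subtraction of the level (so the result is continuous at the threshold) are assumptions for illustration.

```python
# Hypothetical helpers (plain Python, not Igor): flatten the background to zero
# and keep only the parts of the image above a chosen height level.

def percentile_level(img, q):
    """Height below which roughly a fraction q of the pixels fall (0 <= q <= 1)."""
    vals = sorted(v for row in img for v in row)
    idx = min(len(vals) - 1, int(q * len(vals)))
    return vals[idx]


def threshold_features(img, level):
    """Pixels above level keep their height relative to level; all others become 0."""
    return [[v - level if v > level else 0.0 for v in row] for row in img]


img = [[1.0, 5.0],
       [2.0, 8.0]]
level = percentile_level(img, 0.5)
print(level)                           # 5.0
print(threshold_features(img, level))  # [[0.0, 0.0], [0.0, 3.0]]
```

Both images would be thresholded with the same rule before registration; the shift found on the thresholded pair is then applied to the original height data.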

June 14, 2022 at 12:38 pm